About the author

Giuseppe Maxia is a QA developer in MySQL community team.

A system analyst with 20 years of IT experience, he has worked as a database consultant and designer for several years.

He is a frequent speaker at open source events and he's the author of many articles.

He lives in Sardinia (Italy).

MySQL as universal server

MySQL is the most popular open source database server in the world. As such, it must be enough to satisfy the needs of most of its users. But users don't have only needs. They have wishes. And they wish to have a more powerful, more feature rich database server, and that's why MySQL developers are working at new features in 5.1 and 6.0.

Unfortunately, users don't want more power in newest versions. They want more power today.

Using a proxy server, you can make some of your dreams come true:

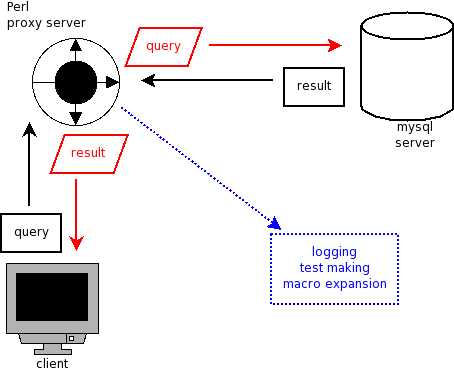

- Implement customized logging in the same server or remotely;

- Automatically generate tests on demand;

- Implement macros for the most common SQL expressions;

- Let your database server execute shell commands and use email;

- best of all: use other database server tables within MySQL!

Can you really do all of the above today? Absolutely.

No need to switch to a alpha stage development server? Nope. Just a plain 5.0 GA would do.

Do you need to compile something? No. The only thing you need is Perl and a couple of commonly available modules.

Introducing DBIx::MyServer

Philip Stoev has written DBIx::MyServer, a Perl module that implements MySQL client/server protocol in Perl.

In plain words, this module can act as a server to any mysql client, meaning that it can accept requests and it knows how to send answers back. If your application can negotiate a dialog to a MySQL server, it can also talk to this specific server.

Thus you can use a MySQL client as interface to read file-system directories, list email headers, get contents from the web, browse Perl data structures. You name it. Anything you could do in Perl you could see through a MySQL interface.

Of course, the server would not be of much usage if it could not act as a man-in-the-middle (or, more appropriately, server-in-the-middle), i.e get a query from a client, pass it to the server, get the results from the server, return them to the client. And what is the advantage, you may ask, other than delaying the data a few microseconds?

The main advantage is that the proxy server can manipulate the query before sending it to the server, and eventually it can modify the results before sending them, back to the client.

For example, you can enforce all GRANT queries to have a password, and you can prevent users from setting empty or weak passwords.

Or you can replace the contents of the 'password' column, so that it will be shown as a string of asterisks.

Since the server is written in Perl, and it's object oriented, adding a feature by sub-classing the server class takes just a few minutes. Implementing a set of macros or just a whole new language with relative features is just plainly easy.

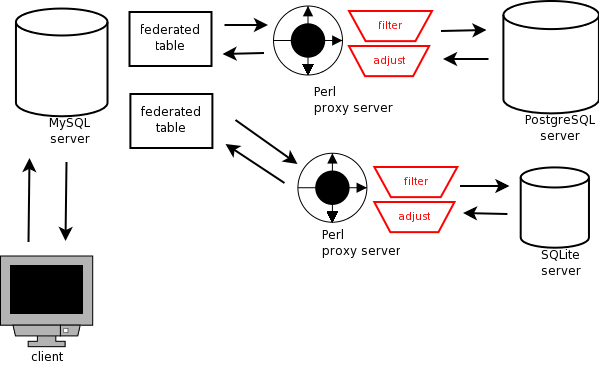

Moreover, since Perl, through the DBI, can connect to any database server, new horizons open for you. You can use any database server as data source for a MySQL Federated table. In other words, you can use a PostgreSQL/Oracle/SQl Server/SQLite table, and a MySQL table in the same query, from a MySQL client, which will see them as normal tables.

Science fiction? Not at all. It's doable now!

Installation

Installing a Perl module with no particularly hard dependencies is quite straightforward. In most operating systems all you need to do is

sudo cpan -i DBIx::MyServer

If that is not supported, there is a general Tutorial on how to install modules and one specific for Win32.

To use this module as a proxy server, you also need Perl Database Interface, better known as the DBI and MySQL specific driver, DBD::mysql.

If you want to use an external DBMS as data source for federated tables, you need the relative DBD module. For this demonstration, I've used the drivers for PostgreSQL and SQLite. You can apply the same method to any database server.

Overview of the proxy server

So, what exactly is a proxy server? In its current implementation, it's a Perl script using the DBIx::MyServer module, which has been instructed to listen to any given port. Out of the box, the module does not do anything special. If its main method (_processCommand()_) is called in a loop, it will just relay requests and results between client and server.

Being an object oriented application, it's easy to subclass the appropriate methods to instruct the server to do something in between. Thus, before sending the query to the intended recipient, the proxy server can modify it, from fixing your most frequent spelling mistakes (CRAETE DATABSEE anyone?) to removing potentially dangerous commands. In the same fashion, the proxy can clean up the results before sending them back.

If you have ever felt constrained by the current client/server limitations, this is a paradigm to consider using, especially for the development and test phase. Using such a system you can have a glimpse inside the hood. More than that, actually. You have your hands on the crank handle, and you can do whatever you want. For example, if you want to restrict a specific piece of information to a group of users, and someone outside the group asks for it, instead of the usual error message, you can have your server returning this:

SELECT secret_data FROM boss_restricted_archive;

+----------+--------------------------------------+

| status | explanation rant |

+----------+--------------------------------------+

| rejected | you sneaky scoundrel! Peeking at the |

| | boss archives! |

| | Kiss your raise goodbye, Joe Curious |

+----------+--------------------------------------+

Your imagination is the limit.

The juicy stuff - Using tables from other DBMS inside MySQL

We can now face the most interesting part. Using tables from other database management systems, other than MySQL, as Federated tables inside MySQL.

If you need to use a table in Oracle, or PostgreSQL, SQL Server, until now the only option would have been importing the data into your database. There are cases, however, where the foreign data changes frequently, and you would need to import the data at very short intervals. If this is the case, instead of importing data, you can just link it to your application, and use it LIVE!

How do we do it?

Now that you know how the proxy server works, it's easy to figure out. It is not your client that uses the proxy server, but your server, when creating and using a Federated table.

If you don't know how Federated tables work, you can look at the manual or at the missing manual.

When accessing a remote table, the Federated engine issues the received query to the remote server, collects the result, and relays it to the client. It is actually another proxy server, hidden within your 5.x MySQL server.

The Federated engine has, however, two limitations:

* it only connects to remote MySQL tables, because it can only speak the MySQL client/server protocol;

* it issues some hidden, undocumented, MySQL-specific queries, which are rejected by non MySQL servers.

Fortunately we've seen that the proxy server can clean up the input before sending to the external server.

This is what happens inside a proxy server bridging data between MySQL and PostgreSQL:

- the proxy server connects to PostgreSQL using the credentials that we provided.

- before issuing any query to PostgreSQL, our middle man does some cleanup:

- removes inverted quotes from table and column names;

- fakes the output of unsupported queries (SHOW TABLE STATUS LIKE 'table_name')

- removes LIMIT clauses from UPDATE/DELETE statements

Think about a common case. You have installed Google Gears, which currently uses SQLite for local storage. If you need to access some of the information without importing into a MySQL table, - which makes a lot of sense, since data stored by Google Gears is likely to be updated frequently - you can then use a Federated table to use the actual data through a Federated table.

Some examples

Let's do it in practice.

First, create the data source in SQLite

$ sqlite3 test.db

SQLite version 3.1.3

Enter ".help" for instructions

sqlite> CREATE TABLE user_list ( user_name varchar(100) not null, info varchar(200));

sqlite> insert into user_list VALUES ('Giuseppe', 'User since 2002 - inserted from SQLite');

sqlite> insert into user_list VALUES ('Joe Hacker', 'User since the beginning - inserted from SQLite');

sqlite> insert into user_list VALUES ('Frank Ly Speakeng', 'User since May 2007 - inserted from SQLite');

sqlite> select * from user_list;

Giuseppe|User since 2002 - inserted from SQLite

Joe Hacker|User since the beginning - inserted from SQLite

Frank Ly Speaking|User since May 2007 - inserted from SQLite

sqlite> .quit

Then we do the same in PostgreSQL

$ psql mydb

Welcome to psql 8.1.8, the PostgreSQL interactive terminal.

Type: \copyright for distribution terms

\h for help with SQL commands

\? for help with psql commands

\g or terminate with semicolon to execute query

\q to quit

mydb=# drop table user_list;

DROP TABLE

mydb=# CREATE TABLE user_list ( user_name varchar(100) not null, info varchar(200));

CREATE TABLE

mydb=# insert into user_list VALUES ('Giuseppe', 'User since 2003 - inserted from PG');

INSERT 0 1

mydb=# insert into user_list VALUES ('Joe Hacker', 'User since the beginning - inserted from PG');

INSERT 0 1

mydb=# insert into user_list VALUES ('Casual User', 'User since January 2006 - inserted from PG');

INSERT 0 1

mydb=# select * from user_list;

user_name | info

-------------+---------------------------------------------

Giuseppe | User since 2003 - inserted from PG

Joe Hacker | User since the beginning - inserted from PG

Casual User | User since January 2006 - inserted from PG

(3 rows)

mydb=# \q

Now, we launch two instances of the proxy server, with the appropriate parameters.

$ sample_proxy 'dbi:SQLite:test.db' 10306 > sqlite.log 2>&1 &

$ sample_proxy 'dbi:Pg:dbname=mydb' 11306 > pg.log 2>&1 &

sample_proxy is a Perl script, based on one of the standard examples provided with the module, which does the changes I've mentioned before. Future versions of DBIx::MyServer should come with a similar script that does the same with less trouble.

The last step is to create the federated tables:

mysql> create table sqlite_users

(user_name varchar(100) not null, info varchar(200))

engine = federated

connection = 'mysql://x:y@127.0.0.1:10306/test/user_list';

Query OK, 0 rows affected (0.11 sec)

mysql> select * from sqlite_users;

+-------------------+-------------------------------------------------+

| user_name | info |

+-------------------+-------------------------------------------------+

| Giuseppe | User since 2002 - inserted from SQLite |

| Joe Hacker | User since the beginning - inserted from SQLite |

| Frank Ly Speaking | User since May 2007 - inserted from SQLite |

+-------------------+-------------------------------------------------+

3 rows in set (0.17 sec)

It works! A table created and served by a foreign DBMS is seen as a MySQL table.

Notice that the only valid reference we are using in the connection string are the host, port, and table name. All the rest is handled inside the proxy server.

Same steps for PostgreSQL:

mysql> create table pg_users

(user_name varchar(100) not null, info varchar(200))

engine = federated

connection = 'mysql://x:y@127.0.0.1:11306/mydb/user_list';

Query OK, 0 rows affected (0.22 sec)

mysql> select * from pg_users;

+-------------+---------------------------------------------+

| user_name | info |

+-------------+---------------------------------------------+

| Giuseppe | User since 2003 - inserted from PG |

| Joe Hacker | User since the beginning - inserted from PG |

| Casual User | User since January 2006 - inserted from PG |

+-------------+---------------------------------------------+

3 rows in set (0.09 sec)

Now, some more interesting queries. Cross-DBMS joins:

Who are the common users in both PostgreSQL and SQLite?

mysql> select pg.user_name

from pg_users pg

inner join sqlite_users lite using (user_name);

+------------+

| user_name |

+------------+

| Giuseppe |

| Joe Hacker |

+------------+

2 rows in set (0.10 sec)

Who are the users of PostgreSQL not using SQLite?

mysql> select pg.user_name

from pg_users pg

left join sqlite_users lite using (user_name)

where lite.user_name is null;

+-------------+

| user_name |

+-------------+

| Casual User |

+-------------+

1 row in set (0.13 sec)

And more. We can insert rows.

mysql> insert into pg_users values ('John Little', 'User from Sheffield forest - inserted from MySQL');

Query OK, 1 row affected (0.23 sec)

mysql> select * from pg_users;

+-------------+--------------------------------------------------+

| user_name | info |

+-------------+--------------------------------------------------+

| Giuseppe | User since 2003 - inserted from PG |

| Joe Hacker | User since the beginning - inserted from PG |

| Casual User | User since January 2006 - inserted from PG |

| John Little | User from Sheffield forest - inserted from MySQL |

+-------------+--------------------------------------------------+

4 rows in set (0.04 sec)

And the inserted rows are available in the original DBMS

$ psql mydb

Welcome to psql 8.1.8, the PostgreSQL interactive terminal.

Type: \copyright for distribution terms

\h for help with SQL commands

\? for help with psql commands

\g or terminate with semicolon to execute query

\q to quit

mydb=# select * from user_list;

user_name | info

-------------+--------------------------------------------------

Giuseppe | User since 2003 - inserted from PG

Joe Hacker | User since the beginning - inserted from PG

Casual User | User since January 2006 - inserted from PG

John Little | User from Sheffield forest - inserted from MySQL

(4 rows)

Other goodies - macros

Another way of using the proxy productively is to convert it into a macro processor.

Think about SQL expressions that you repeat often. Wouldn't be convenient to write, instead of

TABLE_A a INNER JOIN TABLE_B b ON a.x = b.y

a simpler

IJ(TABLE_A, x, TABLE_B, y)

?

Or, instead of

SHOW FULL TABLES FROM dbname

a simpler

ls dbname

?

With a proxy server, this is quite easy. All you need to do is subclass the comQuery() method, check the input text for known macros, and replace them with the intended text before sending it to the server.

This is so simple that I won't even provide an example. I leave it as an exercise for the reader.

More goodies - Customized logging and test generation

Inside the proxy server you have access to all data exchanged between client and server. This is an ideal place to create customized logs.

The actual general log is not so useful in many respects. It records every input, but you have no idea of the output. No way of knowing if a given query was successful or rejected for a syntax error or insufficient privileges.

No way of telling how long the query ran and how many records were returned.

With a proxy server you have an eye at the keyhole of the query factory, so you can provide your own logs in the way you want them. You can store them in a file, or in a remote database server (even a non-MySQL one, if you fancy it).

You can decide which queries to log and in which format, adding information about the time it took to execute, the number of records fetched, and the warnings that were generated.

If an error occurred, you can record that one as well.

And since we are in this unique position, this is the right place to be if we want to generate test cases, which are made of a command script and a list of expected results.

You could run a session like the following:

mysql> START TEST 'SQL_practice' WITH INNODB;

Query OK, 1 row affected (0.0 sec)

# this will start a test script, adding the appropriate 'include' for

# innodb usage

mysql> CREATE TABLE ...

mysql> INSERT INTO TABLE ...

mysql> SELECT x,y,z from tablename

# the output will go to your screen, and to the test result as well

... more commands

... more results

mysql> STOP TEST 'SQL_practice';

Query OK, 1 row affected (0.0 sec)

That will make test composition a bit easier.

Power! - Use OS commands from MySQL

Did the previous section wet your appetite? This one will make you ravenous for power.

What about executing shell commands from MySQL and getting the results on the screen?

For example, wouldn't be cool to do things like this?

mysql> SHELL ls -l /usr/local/mysql/data;

+------------------------------------------------------------------------------------+

| ls -l /usr/local/mysql/data |

+------------------------------------------------------------------------------------+

| total 53364 |

| drwx------ 36 gmax gmax 1224 Jun 12 18:53 bugs |

| -rw-rw---- 1 gmax gmax 8851 Jun 18 12:05 general.log |

| -rw-rw---- 1 gmax gmax 117 Jun 14 18:00 gmac-bin.000001 |

| -rw-rw---- 1 gmax gmax 117 Jun 14 18:09 gmac-bin.000002 |

| -rw-rw---- 1 gmax gmax 1870 Jun 18 12:03 gmac-bin.000003 |

| -rw-rw---- 1 gmax gmax 54 Jun 17 14:37 gmac-bin.index |

| -rw-rw---- 1 gmax gmax 65434 Jun 17 14:38 gmac.err |

| -rw-rw---- 1 gmax gmax 5 Jun 17 14:37 gmac.pid |

| -rw-rw---- 1 gmax gmax 5242880 Jun 17 14:38 ib_logfile0 |

| -rw-rw---- 1 gmax gmax 5242880 Mar 12 22:41 ib_logfile1 |

| -rw-rw---- 1 gmax gmax 44040192 Jun 17 14:38 ibdata1 |

| drwx------ 7 gmax gmax 238 May 28 15:10 myDB |

| drwx------ 53 gmax gmax 1802 Apr 18 11:01 mysql |

| drwx------ 9 gmax gmax 306 May 16 22:24 new_db |

| drwx------ 78 gmax gmax 2652 Jun 12 19:27 qa_contribution |

| drwx------ 35 gmax gmax 1190 Apr 9 15:17 system |

| drwx------ 3 gmax gmax 102 Jun 18 12:03 test |

| drwx------ 6 gmax gmax 204 Mar 20 14:40 testdump |

| drwx------ 6 gmax gmax 204 Jun 12 19:10 web |

| drwx------ 12 gmax gmax 408 Apr 19 18:20 world |

+------------------------------------------------------------------------------------+

21 rows in set (0.01 sec)

or this?

mysql> SHELL df -h;

+--------------------------------------------------------+

| df -h |

+--------------------------------------------------------+

| Filesystem Size Used Avail Use% Mounted on |

| /dev/disk0s2 149G 50G 99G 33% / |

| devfs 1.0k 1.0k 0 100% /dev |

| fdesc 1.0k 1.0k 0 100% /dev |

| <volfs> 512k 512k 0 100% /.vol |

+--------------------------------------------------------+

5 rows in set (0.47 sec)

You could actually interface this with DBD::CSV and present the output in tabular way, but just to give you an example of a quick and easy way of interfacing MySQL with the outside world, here is what you need to do in your code. Subclassing comQuery() will be sufficient for intercepting the shell queries and return the results.

package MyProxy;

our @ISA = qw(DBIx::MyServer::DBI);

sub comQuery {

my ($self, $text, @args) = @_;

if ( $text =~ /^\s*SHELL(.*)/) {

my $command = $1;

my @definitions = (DBIx::MyServer::newDefinition(

undef, name => $command));

my $result = qx/$command/;

my @lines = map { [ $_ ] } split /\n/, $result;

return ($text, \@definitions, \@lines );

}

# gets result from parent

my @result = DBIx::MyServer::DBI::comQuery($self, $text, @args);

return @result;

}

Caveats - Security risks

Of course, all these features will introduce quite a lot of security problems. Don't use macros or OS calls outside your administration environment. Putting this little toy in a web application exposed to the internet would be asking for trouble.

Current limitations

The server is pretty functional as it is today. But it has some rough edges that the author is going to level in the next version, to make it easier to use with Federated and to accept multiple connections. This latter is the biggest limitation of the examples I have provided. Making a full multi-threaded server is not difficult, but not that easy either. The next version should take care of that.

Summing up

Using a proxy server can enhance the features of your server beyond your wildest dreams.

It allows you to use external data sources transparently, to implement macros, write tests on the fly, execute shell commands, and perhaps more that didn't come to mind at the moment.

If you find some more useful applications, or if you want to comment on the ones I suggested, please comment (my_first_name@mysql.com).

Thanks

The world is full of proxies

Just when I finished writing this article, another proxy was announced, the official one. MySQL Proxy is a new product with interesting features. A lightweight proxy server with an embedded LUA interpreter, it will change substantially the way we use the MySQL server. You will hear more about that very soon!

Comments?

Read and post comments on this article in the MySQL Forums. There are currently 1 comments.